Homepage for Heads-Up Computing

At Heads-Up Computing, we are driving a transformative vision in Human-Computer Interaction (HCI) and interactive computing. Discover our latest developments, research contributions, and media coverage as we shape the future of computing.

Start by exploring our article in the September 2023 issue of Communications of the ACM (CACM) magazine. It defines our broad vision, fusing human cognition, artificial intelligence, and interactive technologies, and sets the stage for our research and innovation.

Navigate our homepage to witness our progress in realizing this vision. We push boundaries, creating intelligent systems that align with human needs, emotions, and preferences. Our researchers strive to enhance experiences, boost productivity, and foster meaningful interactions with technology.

Experience the media coverage that highlights our lab and researchers. From prominent technology publications to conferences, our work receives recognition. This showcases the impact and significance of our contributions and the growing importance of Heads-Up Computing in HCI.

Explore articles, publications, and project showcases that offer insights into our world of Heads-Up Computing. Stay updated with our latest breakthroughs and the ongoing pursuit of excellence in HCI research. Join us on this transformative journey.

Welcome to Heads-Up Computing, where technology and human interaction converge to shape computing experiences.

CACM VIDEO

Prof. Shengdong Zhao discusses “Heads-Up Computing: Moving Beyond the Device-Centered Paradigm,” a Research Article in the September 2023 CACM.

CACM Article

Heads-Up Computing: Moving Beyond the Device-Centered Paradigm

Authors: Zhao Shengdong, Felicia Tan, Katherine Fennedy

This article introduces our vision for a new interaction paradigm: “Heads-Up Computing”, a concept involving the provision of seamless computing support for humans’ daily activities. Its synergistic and user-centric approach, made possible by matching input and output channels between the device and the human, frees humans from common constraints imposed by existing interactions (e.g. the ‘smartphone zombie’ posture). Wearable embodiments include a head-piece and a hand-piece device, which enable multimodal interactions and complementary motor movements. While flavors of this vision have been proposed in many research fields and in broader visions like UbiComp, Heads-Up Computing offers a holistic vision focused on the scope of the immediate perceptual space that matters most to users, and establishes design constraints and principles to facilitate the innovation process. We illustrate a day in the life with Heads-Up to inspire future applications and services that can significantly impact the way we live, learn, work, and play.

CCS Concepts: • Human-centered computing → Interaction paradigms; Interaction devices.

Additional Key Words and Phrases: heads-up, human-centered, paradigm

ACM Reference Format:

Zhao Shengdong, Felicia Tan, and Katherine Fennedy. 2023. Heads-Up Computing: Moving Beyond the Device-Centered Paradigm. Communications of the ACM. ACM, New York, NY, USA, 11 pages. https://doi.org/10.1145/3571722

Humans have come a long way in our co-evolution with tools. Well-designed tools effectively expand our physical as well as mental capabilities [38], and the rise of computers in our recent history has opened up possibilities like never before. The Graphical User Interface (GUI) of the 1970s revolutionized desktop computing; traditional computers with text-based command-line interfaces evolved into an integrated everyday device, the personal computer (PC). Similarly, the mobile interaction paradigm introduced in the 1990s transformed how information can be accessed anytime and anywhere with a single hand-held device. Never before have we had so much computing power in the palm of our hands.

The question “Do our tools really complement us, or are we adjusting our natural behavior in order to accommodate our tools?” highlights a key design challenge associated with digital interaction paradigms. For example, we accommodate desktop computers by physically constraining ourselves to the desk. This has, amongst other undesirable consequences, encouraged sedentary lifestyles [25] and poor eyesight [32]. While smartphones do not limit mobility, they encourage users to adopt unnatural behavior such as the head-down posture [27]. Users look down at their hand-held devices and pay little attention to their immediate environment. This ‘smartphone zombie’ phenomenon has unfortunately led to an alarming rise in pedestrian accidents [39].

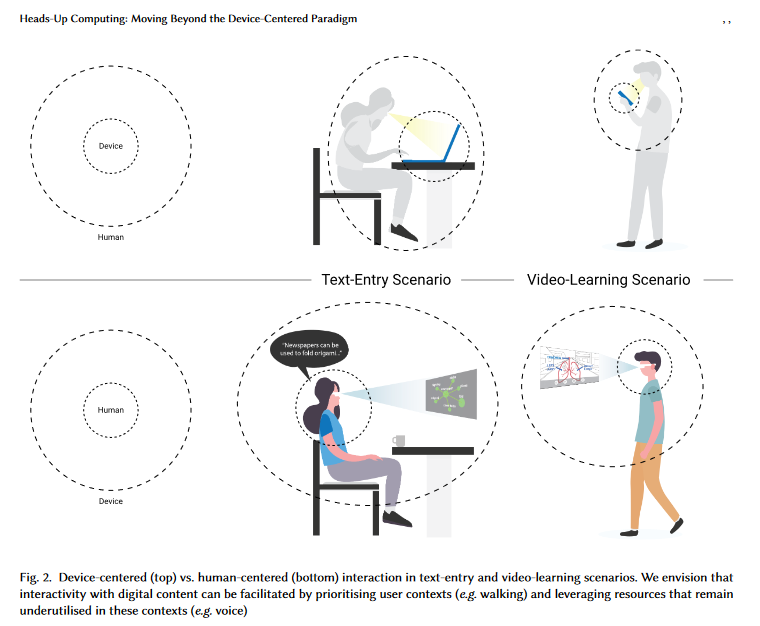

Although there are obvious advantages to the consolidated smartphone hardware form, this ‘centralization’ also means that users receive all inputs and outputs (visual display, sound production, haptic vibration) from a single physical point (the phone). In addition, smartphones keep our hands busy; users interact with the device by holding it and performing gestures such as typing, tapping, and swiping. The eyes- and hands-busy nature of mobile interactions limits how users can engage with other activities, and has been shown to be intrusive, uncomfortable, and disruptive [31]. Could we re-design computing devices to more seamlessly support our daily activities? Above all, can we move beyond the device-centered paradigm and into a more human-centered vision, where tools can better complement natural human capabilities, instead of the other way around (see Fig. 2)?

While user-centered design [23] has existed for decades, we observe that everyday human-computer interactions have not aligned with this approach and its goals. In the next sections, we discuss what we understand placing humans at center stage to entail, review related work, and describe how Heads-Up computing may inspire future applications and services that can significantly impact how we live, learn, work, and play.

1 HUMANS AT THE CENTER STAGE

1.1 Understanding the human body and activities

The human body comprises both input and output (I/O) channels to perceive the world around us. Common human-computer interactions involve using our hands to click a mouse or tap a phone screen, or our eyes to read from a computer screen. However, the hands and eyes are also essential for performing daily activities such as cooking and exercising. When device interactions are performed simultaneously with these primary tasks, competition for I/O resources is introduced [24]. As a result, current computing activities are performed either separately from our daily activities (e.g. work in an office, live elsewhere) or in an awkward combination (e.g. typing while walking like a smartphone zombie). While effectively supporting multitasking is a complex topic, and in many cases not possible, computing activities can still be more seamlessly integrated with our daily lives if the tools are designed using a human-centric approach. By carefully considering resource availability, i.e. the amount of resources available for each I/O channel in the context of the user’s environment and activity, devices could better distribute task loads by leveraging underutilized natural resources and lessening the load on overutilized modalities. This is especially true for so-called multi-channel multitasking scenarios [8], in which one of the tasks is largely automatic (e.g. routine manual tasks such as walking or washing dishes).

To design for realistic scenarios, we look to Dollar’s [6] categorization of Activities of Daily Living (ADL), which provides a taxonomy of crucial daily tasks (albeit originally created for older adults and rehabilitating patients). The ADL categories span domestic (e.g. office presentation), extradomestic (e.g. shopping), and physical self-maintenance (e.g. eating) activities, providing sufficient representation of what the general population engages in every day. It is helpful to select examples from this broad range of activities when learning about resource demands. We can analyze an example activity for its hands- and eyes-busy nature, identify underutilized and overutilized resources, and then select opportune moments for the system to interact with the user. For example, where the primary activity requires the use of the hands but not the mouth and ears (e.g. when a person is doing laundry), it may be more appropriate for the computing system to prompt the user to reply to a chat message via voice instead of thumb-typing. But if the secondary task imposes a significant mental load, e.g. composing a project report, the availability of alternative resources may not be sufficient to support multitasking. Thus, it is important to identify secondary tasks that can not only be facilitated by underutilized resources but also impose minimal overall cognitive load, so that they complement the primary tasks.
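To make the laundry example concrete, here is a minimal Python sketch of the channel-availability reasoning described above. It is an illustration only: the channel names, the `MODALITIES` table, and the 0-to-1 `secondary_load` score with its 0.5 cutoff are hypothetical assumptions, not part of a published Heads-Up implementation.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Activity:
    name: str
    busy: FrozenSet[str]  # human channels the primary activity occupies


# Hypothetical interaction modalities and the human channels each one needs.
MODALITIES = {
    "thumb_typing": frozenset({"eyes", "hands"}),
    "voice_reply": frozenset({"mouth", "ears"}),
}


def pick_modality(primary: Activity, secondary_load: float,
                  load_limit: float = 0.5) -> Optional[str]:
    """Return the first modality whose channels are all free, or None.

    secondary_load is an assumed 0-1 estimate of the secondary task's mental
    demand; above load_limit the system defers instead of interrupting.
    """
    if secondary_load > load_limit:
        return None  # e.g. composing a report: free channels are not enough
    for name, needed in MODALITIES.items():
        if not (needed & primary.busy):  # no shared channel -> no competition
            return name
    return None


laundry = Activity("doing laundry", frozenset({"hands"}))
print(pick_modality(laundry, secondary_load=0.2))  # voice_reply
print(pick_modality(laundry, secondary_load=0.9))  # None
```

The load check runs before the channel check on purpose: as the paragraph notes, free channels alone do not make a demanding secondary task compatible with the primary one.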

To effectively manage resources for activities of daily living and digital interactions, we draw on theories of multitasking. Salvucci et al. [30] describe multitasking through several core theoretical components (the ACT-R cognitive architecture, threaded cognition theory, and memory-for-goals theory). Multiple tasks may be performed concurrently or sequentially, depending on how much time a person spends on one task before switching to another. In concurrent multitasking, tasks are harder to perform when they require the same resources, and easier when they draw on different resource types [37]. In sequential multitasking, users switch back and forth between the primary and secondary tasks over a longer period of time (minutes to hours). Reducing switching costs and facilitating the rehearsal of the ‘problem representation’ [1] can significantly improve multitasking performance. Heads-Up computing is explicitly designed to take advantage of these theoretical insights: 1) its voice + subtle gesture interaction method relies on resources that remain available during daily activities; 2) its heads-up, optical see-through head-mounted display (OHMD) facilitates quicker visual attention switches.
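The point that concurrent tasks interfere more when they compete for the same channel [37] can be illustrated with a toy overlap score. This Python sketch is a simplification assumed for illustration, not the computational model from [37]: each task is given hypothetical per-channel demand values between 0 and 1, and the conflict between two tasks is the sum, over shared channels, of the smaller demand.

```python
from typing import Dict

Demand = Dict[str, float]  # channel -> demand in [0, 1] (hypothetical values)


def conflict(task_a: Demand, task_b: Demand) -> float:
    """Toy interference score: only channels used by both tasks contribute."""
    shared = task_a.keys() & task_b.keys()
    return sum(min(task_a[c], task_b[c]) for c in shared)


walking = {"eyes": 0.4, "legs": 0.8}
thumb_texting = {"eyes": 0.9, "hands": 0.8}
voice_reply = {"ears": 0.5, "mouth": 0.6}

print(conflict(walking, thumb_texting))  # 0.4 (both need the eyes)
print(conflict(walking, voice_reply))    # 0 (no shared channel)
```

Under this scoring, replying by voice while walking does not compete for the eyes, which mirrors the argument for voice + subtle gesture input; real interference also depends on cognitive load and switching costs, which this score ignores.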

Overall, we envision a more seamless integration of devices into human life by first considering the human’s resource availability, primary/secondary task requirements, and then resource allocation.

1.2 Existing traces of human-centered approach

Existing designs like the Heads-Up Display (HUD) and smart glasses exemplify the growing interest in human-centered innovation. A HUD is any transparent display that can present information without requiring the operator to look away from their main field of view [35]. HUDs in the form of windshield displays have become increasingly popular in the automotive industry [3]. Studies have shown that they can reduce reaction times to safety warnings and minimize the time drivers spend looking away from the road [7], improving the safety of vehicle operators. Smart glasses, in turn, can be seen as a wearable HUD that offers additional hands-free capabilities through voice commands. The wearer does not have to adjust their natural posture to the device; instead, a layer of digital information is superimposed upon the wearer’s vision via the glasses. While these are promising ideas, their current usage focuses on resolving particular problems, and they are not designed to be integrated into humans’ general daily activities.

Beyond devices, at the other end of the spectrum, we find general-purpose paradigms like Ubiquitous Computing (UbiComp), which shares a similar human-centered philosophy but covers a very broad design space. Conceptualized by Weiser [36], UbiComp aims to transform physical spaces into computationally active, context-aware, and intelligent environments via distributed systems. Designing within the UbiComp paradigm has led to the rise of tangible and embodied interaction [28], which focuses on the implications and new possibilities of interacting with computational objects within the physical world [13]. These approaches recognize that technology should not overburden human activities and that computer systems should be designed to detect and adapt to naturally occurring changes in human behavior. However, the wide range of devices, scenarios, and physical spaces (e.g. ATM spaces) leaves much freedom to create all kinds of design solutions. This respectable vision has a broad scope and does not define how it should be implemented. Thus, we see the need for an alternative interaction paradigm with a more focused scope, one that integrates threads of similar ideas that currently exist as fragments in the human-computer interaction (HCI) space.

We introduce Heads-Up Computing, a wearable platform-based interaction paradigm through which the ultimate goal of seamless and synergistic integration with everyday activities can be fulfilled. Heads-Up Computing focuses only on the user’s immediate perceptual space, which we define as the space that a person can perceive through his/her senses at any given time. Its specified form, i.e. hardware and software, provides a solid foundation to guide future implementations, effectively putting humans at center stage.

2 THE HEADS-UP COMPUTING PARADIGM

Three main characteristics define Heads-Up Computing: 1) body-compatible hardware components, 2) multimodal voice and gesture interaction, and 3) a resource-aware interaction model. Its overarching goal is to offer more seamless, just-in-time, and intelligent computing support for humans’ daily activities.

2.1 Body-compatible hardware components

To address the shortcomings of device-centered design, Heads-Up computing distributes the input and output modules of the device to match human input and output channels. Leveraging the fact that our head and hands are the two most important sensing and actuating hubs, Heads-Up computing introduces a quintessential design that comprises two main components: the head-piece and the hand-piece. In particular, smart glasses and earphones will directly provide visual and audio output to the human eyes and ears. Likewise, a microphone will receive audio input from humans, while a hand-worn device (e.g. ring, wristband) will be used to receive manual input. Note that while we advocate the importance of a hand-piece, current smartwatches and smartphones are not designed according to the principles of Heads-Up computing: they require users to adjust their head and hand position to interact with the device, and thus are not synergistic enough with our daily activities. Our current implementation of a hand-piece is a tiny ring mouse that can be worn on the index finger to serve as a small trackpad for controlling a cursor, as demonstrated by EYEditor [11] and Focal [33]. It provides a relatively rich set of gestures that can supply manual input for smart glasses. While this is a base setup, many additional capabilities can be integrated into the smart glasses (e.g. eye-tracking [21], emotion-sensing [12]) and the ring mouse (e.g. multi-finger gesture sensing, vibration output) for more advanced interactions. For individuals with limited body functionalities, Heads-Up computing can be custom-designed to redistribute the input and output channels to the person’s available input/output capabilities. For example, for visually impaired individuals, the Heads-Up platform can focus on audio output through the earphones and haptic input through the ring mouse, making it easier for them to access digital information in everyday living.
Heads-Up computing exemplifies a potential design paradigm for the next generation, which necessitates a style of interaction highly compatible with natural body capabilities in diverse contexts.

2.2 Multimodal voice + gesture interaction

Similar to hardware components, every interaction paradigm also introduces new interactive approaches and interfaces. With a head- and hand-piece in place, users would have the option to input commands through various modalities: gaze, voice, and gestures of the head, mouth, and fingers. But given current technical limitations [17], such as being error-prone, requiring frequent calibration, and involving obtrusive hardware, voice and finger gestures currently appear to be the most promising modalities for Heads-Up computing. As mentioned previously, voice is an underutilized input modality that 1) is convenient, 2) frees up both hands and vision, potentially for another simultaneous activity, 3) is fast, and 4) is intuitive. However, voice input can be inappropriate in noisy environments and is sometimes socially awkward to perform [16]. Hence, it has become more important than ever to consider how users could exploit subtle gestures that require less effort. In fact, a recent preliminary study [31] revealed that thumb-index-finger gestures offered an overall balance and were preferred as a cross-scenario subtle interaction technique. More studies are needed to maximize the synergy of finger gestures and other subtle interaction designs in everyday scenarios. For now, the complementary voice and gestural input method is a good starting point for Heads-Up computing. It has been demonstrated by EYEditor [11], which facilitates on-the-go text editing by using 1) voice to insert and modify text and 2) finger gestures to select and navigate the text. Compared with standard smartphone-based solutions, participants using EYEditor corrected text significantly faster while maintaining a higher average walking speed.
Overall, we are optimistic about the applicability of multimodal voice + gesture interaction across many hand-busy scenarios and the general active lifestyle of humans.
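The division of labor described for EYEditor [11] (voice to insert and modify text, finger gestures to select and navigate) can be sketched as a small event handler. This Python toy is not EYEditor’s code; the class name, the gesture names `swipe_left`/`swipe_right`, and word-level selection are hypothetical choices made for illustration.

```python
from typing import List


class HeadsUpEditor:
    """Toy editor (hypothetical): ring gestures move the selection, voice replaces it."""

    def __init__(self, text: str) -> None:
        self.words: List[str] = text.split()
        self.cursor = 0  # index of the currently selected word

    def on_gesture(self, gesture: str) -> None:
        """Assumed ring gestures: swipe right/left moves the selection by one word."""
        if not self.words:
            return
        if gesture == "swipe_right":
            self.cursor = min(self.cursor + 1, len(self.words) - 1)
        elif gesture == "swipe_left":
            self.cursor = max(self.cursor - 1, 0)

    def on_voice(self, utterance: str) -> None:
        """Re-dictation: the spoken words replace the selected word."""
        self.words[self.cursor:self.cursor + 1] = utterance.split()

    def text(self) -> str:
        return " ".join(self.words)


editor = HeadsUpEditor("meet me at noon tomorrow")
for _ in range(3):
    editor.on_gesture("swipe_right")  # selection moves to "noon"
editor.on_voice("3 pm")
print(editor.text())  # meet me at 3 pm tomorrow
```

Keeping navigation on the ring and content on voice means neither channel has to do the other’s job: pointing at a word by voice is awkward, and typing text on a ring is slow.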

2.3 Resource-aware interaction model

The final piece of Heads-Up Computing is its software framework. This framework allows the system to understand when to use which human resource.

Firstly, the ability to sense and recognize ADL is made possible by applying deep learning approaches to audio [18] and visual [22] recordings. The head- and hand-piece configuration can be embedded with wearable sensors to infer the status of both the user and the environment. For instance, is the user stationary, walking, or running? What are the noise and lighting levels of the space the user occupies? These are essential factors that could influence users’ ability to take in information. In the context of on-the-go video learning, Ram and Zhao [26] recommended that visual information be presented serially, persistently, and against a transparent background, to better distribute users’ attention between learning and walking tasks. But more can be done to investigate the effects of various mobility speeds [10] on performance and preference of visual presentation style. It is also unclear how audio channels can be used to offload visual processing. Subtler forms of output, like haptic feedback, can also be used for low-priority notifications [29] or can remain in the background of primary tasks [34].
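As a rough illustration of how sensed context might drive presentation choices, the Python sketch below maps a context reading to display settings. Only the walking rule (serial, persistent text on a transparent background) comes from Ram and Zhao [26] as summarized above; the `Context` fields, the 70 dB and 10,000 lux thresholds, and the audio fallback while running are hypothetical assumptions, since the text notes that audio offloading is still an open question.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Context:
    motion: str      # "stationary", "walking", or "running" (assumed classifier output)
    noise_db: float  # ambient sound level in dB
    lux: float       # ambient illuminance


def presentation_for(ctx: Context) -> Dict[str, str]:
    """Map a sensed context to hypothetical presentation settings."""
    style = {"channel": "visual", "layout": "paged",
             "background": "opaque", "brightness": "normal"}
    if ctx.motion in ("walking", "running"):
        # Recommendation reported for on-the-go video learning [26].
        style.update(layout="serial_persistent", background="transparent")
    if ctx.motion == "running" and ctx.noise_db < 70:
        style["channel"] = "audio"  # hypothetical: offload to the ears when quiet
    if ctx.lux > 10_000:
        style["brightness"] = "high"  # hypothetical: bright outdoor light
    return style


print(presentation_for(Context("walking", noise_db=55, lux=300)))
```

A rule table like this is easy to inspect but brittle; a learned model could replace it once studies such as those on mobility speed [10] provide data to fit.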

Secondly, the resource-aware system integrates feedforward concepts [5] when communicating with users: it presents which commands are available and how they can be invoked. While designers may want to minimize visual clutter on the smart glasses, it is also important that relevant head- and hand-piece functions are made known to users. To manage this, the system needs to assess resource availability for each human input channel in any particular situation. For instance, to update a marathon runner about his/her physiological status, the system should sense whether finger gestures or audio commands are optimal, and the front-end interface should dynamically configure its interaction accordingly. Previous works primarily explored feedforward for finger/hand gestural input [9, 14], but to the best of our knowledge, none has yet addressed this growing need for voice input.
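A minimal Python sketch of the feedforward idea: given which input channels are currently usable, show the user only the commands they can invoke right now, and how. The command table, the hint wording, the preference for gestures over voice, and the `max_hints` cap (a stand-in for keeping the glasses uncluttered) are all hypothetical.

```python
from typing import List

# Hypothetical commands for the marathon example, with one way to invoke each.
COMMANDS = {
    "heart rate": {"voice": 'say "heart rate"', "gesture": "double-tap ring"},
    "pace": {"voice": 'say "pace"', "gesture": "swipe up on ring"},
    "distance": {"voice": 'say "distance"', "gesture": "swipe down on ring"},
}


def feedforward_hints(voice_ok: bool, gesture_ok: bool,
                      max_hints: int = 2) -> List[str]:
    """Return short hints for commands invocable with the available channels."""
    hints = []
    for command, ways in COMMANDS.items():
        if gesture_ok:            # assumed preference: gestures when possible
            hints.append(f"{command}: {ways['gesture']}")
        elif voice_ok:
            hints.append(f"{command}: {ways['voice']}")
    return hints[:max_hints]      # cap hints to limit visual clutter


print(feedforward_hints(voice_ok=True, gesture_ok=False))
# ['heart rate: say "heart rate"', 'pace: say "pace"']
```

Deciding `voice_ok` and `gesture_ok` is exactly the resource-availability assessment the paragraph calls for; here they are simply passed in as flags.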

An important area of expansion for the Heads-Up paradigm is its quantitative model, one that could optimize interactions with the system by predicting the relationship between human perceptual space constraints and primary tasks. Such a model will be responsible for delivering just-in-time information to and from the head- and hand-piece. We hope future developers can leverage essential back-end capabilities through this model as they write their applications. The resource-aware interaction model holds great potential for research expansion, and presents exciting opportunities for Heads-Up technology of the future.

3 A DAY IN THE LIFE WITH HEADS-UP COMPUTING

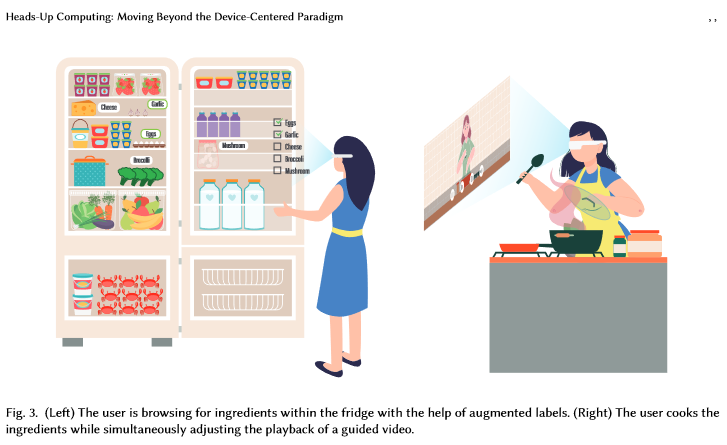

Beth is a mother of two who works from home. She starts her day by preparing breakfast for the family. Today, she sets out to cook a new dish, a broccoli frittata. With the help of a Heads-Up computing virtual assistant named Tom, Beth asks, “Hey Tom, what are the ingredients for broccoli frittata?” Tom renders an ingredient checklist on Beth’s smart glasses. Through the smart glasses’ front camera, Tom sees what Beth sees and detects that she is scanning the fridge. This intelligent sensing prompts Tom to update the checklist collaboratively with Beth as she removes each ingredient from the fridge and places it on the countertop, occasionally glancing at her see-through display to double-check that each item matches. With advanced computer vision and Augmented Reality (AR) capabilities, Beth can even ask Tom to annotate where each ingredient is located within her sight. Once all the ingredients have been identified, Beth proceeds with the cooking. Hoping to be guided with step-by-step instructions, she says: “Hey Tom, show me how to cook the ingredients.” Tom searches for a relevant video on YouTube and automatically cuts it into step-wise segments, playing the audio through the wireless earset and the video through the display. Beth uses the ring mouse she is wearing to jump forward or backward within the video. Although cooking requires both hands, she can use her idle thumb to control the playback of the video tutorial at the same time. Tom’s just-in-time assistance seamlessly adapts to Beth’s dynamic needs and constraints, without her having to reach for her phone, which would interrupt her task.

Beth finishes cooking and feeds her kids, during which she receives an email from her work supervisor asking when she is available for an emergency meeting. Based on Beth’s previous preferences, Tom understands that Beth values quality time with her family and shields her from notifications from work or social groups during certain times of the day. However, she makes an exception for messages labeled as ‘emergency.’ Like an intelligent observer, Tom adjusts how it delivers information to Beth by saying, “You have just received an emergency email from George Mason. Would you like me to read it out?” Beth can simply say “Yes” or “No,” depending on what suits her. By leveraging her idle ears and mouth, Heads-Up computing allows Beth to focus her eyes and hands on what matters more in that context: her family.
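The notification behavior in Beth’s story can be expressed as a small policy function. In this Python sketch, the family-time window, the message labels, and the return values are hypothetical; the only rules taken from the story are that non-emergency messages are held during family time and that an emergency is announced by voice, using Beth’s idle ears and mouth, with a yes/no prompt.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional


@dataclass
class Message:
    sender: str
    label: str  # e.g. "emergency", "work", "social"


FAMILY_TIME = (time(7, 0), time(8, 30))  # hypothetical learned preference


def delivery(msg: Message, now: time, eyes_hands_busy: bool) -> Optional[str]:
    """Decide how to surface msg now; None means hold it for later."""
    start, end = FAMILY_TIME
    if start <= now <= end and msg.label != "emergency":
        return None  # protect family time from routine work/social pings
    if eyes_hands_busy:
        return "audio_prompt"  # e.g. "...Would you like me to read it out?"
    return "glasses_banner"


email = Message("George Mason", "emergency")
print(delivery(email, time(7, 45), eyes_hands_busy=True))  # audio_prompt
```

Checking the time window before the channel state keeps the user’s stated priority (family time) above the convenience of whichever channel happens to be free.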

Existing voice assistants such as Amazon Alexa, Google Assistant, Apple’s Siri, and Samsung Bixby have gained worldwide popularity for the conversational interaction style that they offer. They can be defined as “software agents that are powered by artificial intelligence and assist people with information searches, decision-making efforts or executing certain tasks using natural language in a spoken format” [15]. Despite allowing users to multitask and work hands-free, the usability of current speech-based systems still varies greatly [40]. These voice assistants currently do not achieve the depth of personalization and integration that Heads-Up computing can achieve, given its narrower focus on the user’s immediate perceptual space and its clearly defined form, i.e. hardware and software.

The story above depicts a system that leverages visual, auditory, and movement-based data from a distributed range of sensors on the user’s body. It adopts a first-person view as it collects and analyzes contextual information (e.g. the camera on the glasses sees what the user sees and the microphone on the head-piece hears what the user hears). It leverages the resource-aware interaction model to optimize the allocation of Beth’s bodily resources based on the constraints of her activities. The relevance and richness of data collected from the user’s immediate environment, coupled with the processing capability, allows the system to anticipate the user’s needs and to calculate quantitative information ahead of the user. Overall, we envision that Tom will be a human-like agent, able to interact with and assist humans. From cooking to commuting, we believe that providing just-in-time assistance has the potential to transform relationships between devices and humans, thereby improving the way we live, learn, work, and play.

4 FUTURE OF HEADS-UP COMPUTING

Global technology giants like Meta, Google, and Microsoft have invested considerably in the development of wearable AR [2]. The rising predicted market value of AR smart glasses [19] highlights the potential of such interactive platforms. As computational and human systems continue to advance, design, ethical, and socio-economic challenges will also evolve. We recommend the paper by Mueller et al. [20], which presents a vital set of challenges relating to Human-Computer Integration, including compatibility with humans and effects on human society, identity, and behavior. In addition, Lee and Hui [17] effectively sum up interaction challenges specific to smart glasses and their implications for multimodal input methods, all of which are relevant to the Heads-Up paradigm.

At the time of writing, there remains a great deal of uncertainty around wearable technology regulation globally. Numerous countries have no regulatory framework, whereas existing frameworks in other countries are being actively refined [4]. For the promise of wearable technology to be fully realized, we share the hope that different stakeholders (theorists, designers, and policymakers) will collaborate to drive this vision forward and into a space of greater social acceptability. To summarize the Heads-Up Computing vision, we outline the following key points:

- Heads-Up Computing aims to move away from device-centered computing and instead place humans at center stage. We envision a more synergistic integration of devices into humans’ daily activities by first considering the human’s resource availability, primary and secondary task requirements, and resource allocation.

- Heads-Up Computing is a wearable platform-based interaction paradigm. Its quintessential body-compatible hardware components comprise a head- and hand-piece. In particular, smart glasses and earphones will directly provide visual and audio output to the human eyes and ears. Likewise, a microphone will receive audio input from humans, while a hand-worn device (e.g. ring, wristband) will be used to receive manual input.

- Heads-Up Computing utilizes multimodal I/O channels to facilitate multitasking scenarios. Voice input and thumb-index-finger gestures are examples of interactions that have been explored as part of the paradigm.

- The resource-aware interaction model is the software framework of Heads-Up Computing, which allows the system to understand when to use which human resource. Factors such as whether the user is in a noisy place can influence their ability to absorb information. Thus, the Heads-Up system aims to sense and recognize the user’s immediate perceptual space. Such a model will predict human perceptual space constraints and primary task engagement, then deliver just-in-time information to and from the head- and hand-piece.

- A highly seamless, just-in-time, and intelligent computing system has the potential to transform relationships between devices and humans, and there is a wide variety of daily scenarios to which the Heads-Up vision can be applied to deliver benefits. Its evolution is inevitably tied to the development of head-mounted wearables, as this emergent platform makes its way into the mass consumer market.

When asked about the larger significance of the Heads-Up vision, the authors reflect on a regular weekday in their lives: 8 hours spent in front of a computer and another 2 hours on the smartphone. Achievements in digital productivity come too often at the cost of being removed from the ‘real world’. What wonderful digital technology humans have come to create, perhaps the most significant in the history of our co-evolution with tools. Could computing systems be so well integrated that they not only support but enhance our experience of physical reality? The ability to straddle both worlds, the digital and the non-digital, is increasingly pertinent, and we believe it is time for a shift in paradigm. We invite individuals and organizations to join us in our journey to design for more seamless computing support, improving the way future generations live, learn, work, and play.

References: https://dl.acm.org/doi/10.1145/3571722

For more details on related studies, please refer to our publication section.

Media

CNA NEWS FEATURE

Prof. Zhao Shengdong shares more about the Heads-Up Computing vision with CNA Insider – “Metaverse: What The Future Of Internet Could Look Like”

Official lab Heads-Up video

Presentation Slides

Our slides, which contain more information on the NUS-HCI Lab and the Heads-Up vision, are available for download here.

CACM Magazine Article

Our heads-up computing CACM article is now live.

Our Topics

Our Heads-Up research directions include foundational topics as well as their applications. We expect this to evolve and grow over time. Below, we include some examples of current work:

Notifications

Present notifications effectively on OHMD to minimize distractions to primary tasks (e.g. notification display for conversational settings)

Resource interaction model

Establish how different situations affect our ability to take in information through different input channels. Understand which input methods are comfortable and non-intrusive for users’ everyday tasks.

Monitoring Attention

Monitor attention state fluctuations continuously and reliably via EEG (e.g. during video-learning scenarios)

Education & Learning

Design learning videos on OHMD for on-the-go situations, effectively distributing users’ attention between learning and walking tasks. Support microlearning in mobile scenarios (mobile information acquisition).

Healthcare & Wellness

Facilitate OHMD-based mindfulness practice that is highly accessible, convenient, and easy for novice practitioners to implement in casual everyday settings

Text Presentation

Explore text display design factors for optimal information consumption on OHMDs (e.g. in mobile environments)

Developer/Research tools for Heads-Up applications

Provide testing and development tools to help researchers and developers build Heads-Up applications, streamlining their work process (e.g. learning video-builder)

Past Publications

Recent publications

Given that Heads-Up is a new paradigm, how do users provide input?

- We propose a voice + gesture multimodal interaction paradigm, in which voice is mainly responsible for text input, complemented by gesture input from wearable hand controllers (e.g., an interactive ring).

- Voice-based text input and editing. When users are engaged in daily activities, their eyes and hands are often busy; thus we believe voice is a better modality for inputting text in Heads-Up computing. However, we don’t just input text; editing is a big part of text processing, and editing text using voice alone is known to be a very challenging problem. EYEditor is a solution we have developed to support mobile voice-based text editing. It uses voice re-dictation to correct text and a wearable ring mouse to make finer adjustments.

- EYEditor: Towards On-the-Go Heads-Up Text Editing Using Voice and Manual Input

- While the paper above addresses voice-based text input in general, we also present an application scenario: writing about one's experiences in situ using voice-based multimedia input.

- LiveSnippets: Voice-Based Live Authoring of Multimedia Articles about Experiences

- Eyes-free touch-based text input as a complementary input technique. When voice-based text input is inconvenient (e.g., in places where quiet is required), users can instead type with a technique we developed in collaboration with Tsinghua University that supports eyes-free text entry. "Eyes-free" here does not mean there is no visual display; rather, users do not need to look at the keyboard while typing. This allows users to maintain a heads-up, hands-down posture while entering text on a smart-glasses display.

- BlindType: Eyes-Free Text Entry on Handheld Touchpad by Leveraging Thumb's Muscle Memory

- Interactive ring as a complementary input technique for command selection and spatial referencing. Voice input has its limitations, as some information is inherently spatial, so we also need a device that can perform simple selection as well as 2D or 3D pointing. However, it was not known how users could best perform such operations across a variety of daily scenarios. Different interaction techniques may be optimal in different scenarios, but users are unlikely to be willing to carry multiple devices or learn multiple techniques for daily use. So what is the best cross-scenario device or technique for command selection and spatial referencing? We conducted a series of experiments to evaluate alternatives that support synergistic interactions in Heads-Up computing scenarios, and found that an interactive ring stands out as the best cross-scenario input technique for selection and spatial referencing. Refer to the following paper for more details:

- Ubiquitous Interactions for Heads-Up Computing: Users’ Preferences for Subtle Interaction Techniques in Everyday Settings

How should information be output?

The goal of Heads-Up computing is to support users' current activity with just-in-time, intelligent assistance, in the form of either digital content or, potentially, physical help (e.g., from robots). When users are already engaged in a task, presenting information to them inevitably creates a multitasking scenario. Multitasking with simple information is relatively easy, but in some cases the best support takes the form of dynamic information, which raises the question of how best to present dynamic information to users while they multitask. After a series of studies, we developed LSVP, a presentation style better suited to displaying dynamic information. We have also investigated the use of paracentral and near-peripheral vision for presenting information on OHMDs/smart glasses during in-person social interactions. Read the following papers for more details:

- LSVP: Towards Effective On-the-go Video Learning Using Optical Head-Mounted Displays

- Paracentral and near-peripheral visualizations: Towards attention-maintaining secondary information presentation on OHMDs during in-person social interactions

Older publications on wearable solutions and multimodal interaction techniques that are foundational to Heads-Up computing:

Tools, Guides and Datasets

- Video Dataset: OHMD-based Studies or Video Learning Experiments

This is the video dataset used in our study "LSVP: Towards Effective On-the-go Video Learning Using Optical Head-Mounted Displays". The folder contains video materials that can be used to conduct video-learning experiments on Optical Head-Mounted Displays (OHMDs, or smart glasses), or in any within-subject study design in general.

- GitHub source code: Progress notifications on OHMD

This repository contains the OHMD progress-bar notifications (circular, textual, and linear) used in our study "Paracentral and near-peripheral visualizations: Towards attention-maintaining secondary information presentation on OHMDs during in-person social interactions". It includes the notification-triggering implementation (Python) and the UI implementation (Unity).

- GitHub source code: EYEditor

This repository contains the text-editing tool used in our study "EYEditor: Towards On-the-Go Heads-Up Text Editing Using Voice and Manual Input". It supports both voice and manual input (via a ring mouse).

- GitHub source code: HeadsUp Glass

This is an Android app framework for smart glasses.

NOTE: You may also refer to the NUS-HCI lab's overall GitHub repository.

Please cite the original studies when using these resources in your research.

- Guide: Controlling Xiaomi IoT Devices Using Python

This is a guide to using Python to control Xiaomi's IoT devices. Sample code will be released after the related paper is published.

Main Participants

We thank the many others who have collaborated with us on our projects in one capacity or another.